Binary vs Hexadecimal

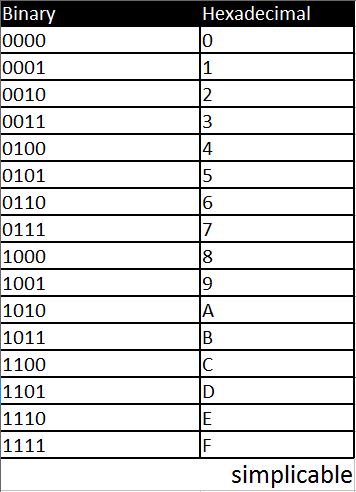

Computers can only think in binary. All digital information is stored and processed as binary. Even so, there is a computer science culture of using hexadecimal for a wide range of computing structures and systems. The machines themselves don't speak hexadecimal but represent it as binary. The main reason that hexadecimal comes up in computing is that it maps neatly to binary: each hexadecimal digit corresponds to exactly four binary digits (bits), so any binary number can be split into groups of four and written one hexadecimal digit per group.

This mapping is so convenient that software is typically designed to display binary data as hexadecimal. Tools that show raw data will rarely display binary such as "0101" on a screen but instead will display the hexadecimal equivalent "5." As such, hardware designers tend to think in hexadecimal and design systems based on it. For example, it is common for memory addresses to be written in hexadecimal.
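
The correspondence between binary and hexadecimal can be illustrated with a short Python sketch. The helper name `bin_to_hex` is made up for this example and is not part of any library; it simply groups a string of bits into fours and looks up one hexadecimal digit per group.

```python
def bin_to_hex(bits: str) -> str:
    """Convert a string of 0s and 1s to hexadecimal, four bits at a time."""
    # Left-pad with zeros so the length is a multiple of four.
    padded = bits.zfill((len(bits) + 3) // 4 * 4)
    digits = "0123456789ABCDEF"
    # Each group of four bits selects exactly one hexadecimal digit.
    return "".join(
        digits[int(padded[i:i + 4], 2)] for i in range(0, len(padded), 4)
    )


# Print the full mapping from four-bit patterns to hexadecimal digits.
for value in range(16):
    print(f"{value:04b} -> {value:X}")

print(bin_to_hex("0101"))      # 5
print(bin_to_hex("11111111"))  # FF
```

Because every group of four bits is converted independently, the conversion never needs arithmetic on the whole number, which is what makes hexadecimal a convenient shorthand for binary.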

| Binary vs Hexadecimal | Binary | Hexadecimal |
| --- | --- | --- |
| Definition | A number system based on two symbols, typically denoted 0 and 1. | A number system based on sixteen symbols, typically denoted 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F. |
| Computing Significance | The basis of all data processing and storage. | Computer scientists and hardware designers tend to think in hexadecimal. As such, hardware, systems and standards are often based on hexadecimal numbers. |
| Human Readability | Considered completely unreadable, as humans quickly tire of looking at the same two symbols repeated over and over. | Hexadecimal is shorter than binary by a factor of 4, since each hexadecimal digit stands for four bits. It also has far more variation, with 16 symbols that make it feel more like a natural language. |
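
To make the readability difference concrete, the following Python sketch prints the same 32-bit value in both notations. The value `0xDEADBEEF` is an arbitrary example chosen for illustration, not something taken from a particular system.

```python
# Arbitrary 32-bit example value, chosen only for illustration.
value = 0xDEADBEEF

binary_text = format(value, "032b")  # 32 binary digits, zero-padded
hex_text = format(value, "08X")      # 8 hexadecimal digits, zero-padded

print(binary_text)
print(hex_text)

# The binary form is four times as long as the hexadecimal form.
print(len(binary_text) // len(hex_text))  # 4
```

The same pattern applies to memory addresses: a 32-bit address needs 32 characters in binary but only 8 in hexadecimal.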