Existential Risk

The potential for certain types of AI, such as systems capable of recursive self-improvement, to develop malicious, unpredictable or superintelligent features that pose a large-scale risk.

Privacy

The potential for technology such as sentiment analysis to monitor human communication has broad implications for privacy rights. For example, a government could monitor practically all electronic communications for attitudes, emotions and opinions using AI techniques.

Quality Of Life

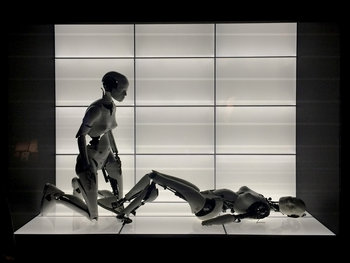

The potential for artificial intelligence to be used in ways that decrease quality of life. For example, a nursing home that cares for elderly patients using robot nurses that provide no human contact.

Single Point Of Failure

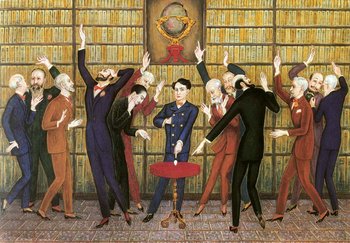

Replacing diverse human decisions with a handful of algorithms may create a single point of failure. For example, if 40% of self-driving cars ran on a common operating system, a bug in a software update could cause mass accidents.

Weaponization

The potential for weaponization of artificial intelligence, such as swarm robots, in which machines make decisions to harm humans.

| Overview: Artificial Intelligence Risks | |
| --- | --- |
| Areas | |
| Definition | The potential for losses related to the use of artificial intelligence. |
| Related Concepts | |