An algorithm scores credit applications partly on zip code, a variable that maps closely to the demographics of an area.

An AI trained on American English sources assigns low quality ratings to other variants of English, such as British or Australian English.

A bug in a banking system rejects credit card applications from people who list their occupation as retired, even though this occupation is supposed to be eligible.
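
A defect like this is often a mismatch between a stated policy and a hard-coded rule. The sketch below is a hypothetical Python illustration, not taken from any real banking system: the occupation names, the `is_eligible_*` functions and the allow-list approach are all assumptions made for the example. It shows how a simple check derived from the policy would expose the missing occupation.

```python
# Hypothetical illustration: the policy says retirees are eligible,
# but the hard-coded allow-list in the implementation omits them.
ELIGIBLE_OCCUPATIONS_POLICY = {"employed", "self-employed", "retired"}
ELIGIBLE_OCCUPATIONS_BUGGY = {"employed", "self-employed"}  # "retired" missing


def is_eligible_buggy(occupation: str) -> bool:
    """Occupation check as (incorrectly) implemented."""
    return occupation.strip().lower() in ELIGIBLE_OCCUPATIONS_BUGGY


def is_eligible_fixed(occupation: str) -> bool:
    """Occupation check that derives its rule directly from the stated policy."""
    return occupation.strip().lower() in ELIGIBLE_OCCUPATIONS_POLICY


def find_rejected_policy_occupations(check) -> list[str]:
    """Regression check: every occupation the policy allows must be accepted."""
    return sorted(o for o in ELIGIBLE_OCCUPATIONS_POLICY if not check(o))


print(find_rejected_policy_occupations(is_eligible_buggy))  # ['retired']
print(find_rejected_policy_occupations(is_eligible_fixed))  # []
```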

A CAPTCHA security challenge that controls access to digital resources relies on cultural references or situations that are unfamiliar to some people because of their background, age or nationality.

An AI used to screen job applicants learns to prefer certain action verbs, such as "executed", that appear more commonly on the resumes of men.
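
One way such a preference can arise is a screening model that weights each word by how often it appeared in resumes that were historically hired. The minimal sketch below assumes a made-up hiring history and a deliberately naive scoring rule; `learn_word_weights` and `score` are hypothetical helpers, not a real screening product. Because every past hire in this invented data used "executed", the word earns the maximum weight, and two resumes describing the same work score differently based on verb choice alone.

```python
from collections import Counter

# Hypothetical historical data: (resume text, was the person hired?).
# Past hiring is assumed to have favored men, who in this invented data
# were the ones writing "executed".
history = [
    ("executed product launch", True),
    ("executed migration plan", True),
    ("executed sales strategy", True),
    ("led product launch", False),
    ("coordinated migration plan", False),
]


def learn_word_weights(rows: list[tuple[str, bool]]) -> dict[str, float]:
    """Weight = share of resumes containing the word that resulted in a hire."""
    seen, hired = Counter(), Counter()
    for text, was_hired in rows:
        for word in set(text.split()):
            seen[word] += 1
            if was_hired:
                hired[word] += 1
    return {w: hired[w] / seen[w] for w in seen}


def score(text: str, weights: dict[str, float]) -> float:
    """Average weight of the words in a resume (unknown words count as 0)."""
    words = text.split()
    return sum(weights.get(w, 0.0) for w in words) / len(words)


weights = learn_word_weights(history)
print(weights["executed"], weights["led"])  # 1.0 0.0
print(score("executed product launch", weights) > score("led product launch", weights))  # True
```

Nothing in the model refers to gender, yet it reproduces the skew in the historical decisions it learned from.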

An AI finds that employees with certain last names tend to receive higher performance review scores and begins to prefer job applications from people with those names.

An AI flags advanced but correct English grammar as an error because such constructions were rare in its training data.

An AI treats valid but relatively obscure terms as misspellings of common terms.

An algorithm may be designed in a way that reflects the biases of a society, organization or individual. For example, consider a job screening algorithm that automatically expedites and promotes applications that mention a particular institution, such as a top university. If this university were dominated by the upper class, it could be argued that the algorithm is biased against the middle and working classes.

Algorithms can implement known cognitive biases. For example, a financial model might calculate historical returns using only the companies currently in an index, ignoring firms that dropped out of the index or went bankrupt. This is an example of survivorship bias.
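
A small worked example shows how much survivorship bias can distort a result. The firms and returns below are invented, and returns are equal-weighted for simplicity (many real indexes weight by market capitalization). The sketch computes the average return twice: once over the firms still in the index and once over every firm that was in the index at the start of the period.

```python
from dataclasses import dataclass


@dataclass
class Firm:
    name: str
    total_return: float  # return over the period, e.g. 0.25 = +25%
    still_in_index: bool


# Hypothetical data: five firms that were in the index at the start of the period.
firms = [
    Firm("A", 0.40, True),
    Firm("B", 0.20, True),
    Firm("C", 0.10, True),
    Firm("D", -0.60, False),  # dropped out of the index
    Firm("E", -1.00, False),  # went bankrupt (total loss)
]


def mean_return(sample: list[Firm]) -> float:
    """Equal-weighted average return of a group of firms."""
    return sum(f.total_return for f in sample) / len(sample)


survivors_only = mean_return([f for f in firms if f.still_in_index])
all_firms = mean_return(firms)

print(f"Survivors only: {survivors_only:+.2%}")
print(f"All firms:      {all_firms:+.2%}")
```

Looking only at the survivors turns an average loss into an apparent gain.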

An AI may suffer from sampling bias, whereby its training data doesn't properly represent the intended population. For example, a model of the health of the general population might be built using data about full-time workers only. This would be biased because unhealthy people may be less likely to be working.
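
The effect of sampling only full-time workers can be seen with a handful of made-up numbers. In this hypothetical population, health scores on a 0 to 100 scale are assumed to be lower among people who are not working, so an estimate built from workers alone overstates the health of the population as a whole.

```python
from statistics import mean

# Hypothetical population: (works_full_time, health_score on a 0-100 scale).
# Illustrative numbers only, chosen so that poorer health is more common
# among people who are not working.
population = [
    (True, 85), (True, 80), (True, 78), (True, 90),
    (False, 82), (False, 55), (False, 40), (False, 60),
]

full_time_sample = [score for works, score in population if works]
everyone = [score for _, score in population]

print("Mean health, full-time workers only:", mean(full_time_sample))
print("Mean health, whole population:      ", mean(everyone))
```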

An AI may discover a correlation in data and proceed to use it as if it were a cause-and-effect relationship. Humans have a similar, well-known cognitive bias whereby they see a meaningful pattern where there is none. For example, if your boss is in a bad mood every time you eat toast for breakfast, it would be wrong to conclude that your eating toast causes your boss's moods. Because AI tends to work heuristically, based on correlations found in training data, this type of bias may be common.
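
The toast example can be expressed in code. In the hypothetical log below, a hidden factor (a stressful meeting, an assumption added for illustration) drives the boss's mood, and toast merely coincides with it. The correlation between toast and mood is perfect, yet a model that treats that correlation as causal makes the wrong prediction once the input is changed. The `pearson`, `boss_mood` and `naive_predict` functions are illustrative helpers written for this sketch.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical 10-day log (1 = yes). In this made-up world a stressful
# meeting is the only cause of the boss's bad mood, and you happen to
# eat toast on exactly the meeting days.
meeting_days = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
ate_toast = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]


def boss_mood(meeting: int, toast: int) -> int:
    """True causal rule for this example: mood depends on the meeting only."""
    return meeting  # `toast` is deliberately ignored


def naive_predict(toast: int) -> int:
    """A model that treats the observed correlation as cause and effect."""
    return toast


bad_mood = [boss_mood(m, t) for m, t in zip(meeting_days, ate_toast)]
print("toast vs. bad mood correlation:", pearson(ate_toast, bad_mood))

# Intervention: stop eating toast entirely.
no_toast = [0] * len(meeting_days)
predicted = [naive_predict(t) for t in no_toast]
actual = [boss_mood(m, t) for m, t in zip(meeting_days, no_toast)]
print("predicted bad-mood days:", sum(predicted))  # 0
print("actual bad-mood days:   ", sum(actual))     # 4
```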

AI may model things based on factors that are heavily correlated with identity characteristics such as gender, ethnicity and age. For example, an ecommerce AI might find that people who buy product A are less price sensitive and are willing to accept higher prices for products generally. If product A were almost exclusively purchased by women, the model could effectively be biased against women, charging them more based on their predicted price sensitivity.
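
A short audit illustrates how a model can disadvantage a group it never explicitly sees. The customers, base price and 20% markup below are hypothetical. The pricing function uses only the purchase of product A; gender is recorded solely so that average quoted prices can be compared by group afterward.

```python
from statistics import mean

BASE_PRICE = 50.0
MARKUP_FOR_LOW_SENSITIVITY = 1.20  # hypothetical 20% markup

# Hypothetical customers. The pricing model never reads "gender";
# it is kept here only to audit the outcome.
customers = [
    {"gender": "F", "bought_product_a": True},
    {"gender": "F", "bought_product_a": True},
    {"gender": "F", "bought_product_a": True},
    {"gender": "F", "bought_product_a": False},
    {"gender": "M", "bought_product_a": False},
    {"gender": "M", "bought_product_a": False},
    {"gender": "M", "bought_product_a": False},
    {"gender": "M", "bought_product_a": False},
]


def quoted_price(customer: dict) -> float:
    """Price based only on predicted price sensitivity (the proxy feature)."""
    if customer["bought_product_a"]:
        return BASE_PRICE * MARKUP_FOR_LOW_SENSITIVITY
    return BASE_PRICE


def average_price_by_group(rows: list[dict], group_key: str) -> dict[str, float]:
    """Audit step: average quoted price for each value of group_key."""
    groups: dict[str, list[float]] = {}
    for row in rows:
        groups.setdefault(row[group_key], []).append(quoted_price(row))
    return {g: mean(prices) for g, prices in groups.items()}


print(average_price_by_group(customers, "gender"))
```

Comparing outcomes across groups in this way is one simple check; it reveals a gap but does not by itself explain why the gap exists.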

Principle of Human Accountability

The principle of human accountability is the idea that humans remain responsible for all decisions, scores and actions of an organization. This would require organizations to understand the systems they implement well enough to explain, in plain language, how those systems score things and make decisions.

Notes

Each of the examples above are hypothetical and designed to be illustrative.| Overview: IT Bias | ||

Type | ||

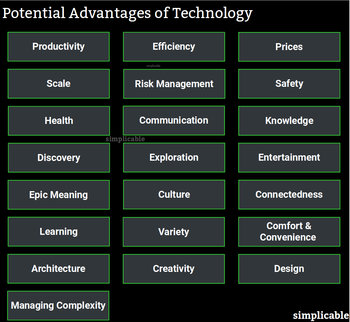

Definition | Patterns of illogical or unfair decisions, actions and scores generated by software. | |

Types | Algorithmic biasesAI BiasesSoftware Defects | |

Related Concepts | ||